All online marketers strive to roll out campaigns and publish assets that perform well and bring ROI.

Easier said than done.

Intuition alone doesn’t cut it because, as much as we’d like to, we can (very) rarely predict customer behavior.

So, we need something more reliable to go on. We need actual facts—data.

This is where A/B testing comes into play.

In this article, we will zoom in on this research method, explore its benefits, learn how to do A/B testing, and go through a few best practices.

I. What Is A/B Testing?

II. A/B Testing in Modern Digital Marketing

III. The Benefits of A/B Testing

IV. How A/B Testing Works

V. The Different Types of A/B Testing

VI. Key Metrics Used in A/B Testing

VII. How to Conduct an A/B Test

VIII. Best Practices for A/B Testing

IX. How to Do A/B Testing in Creatopy

But first:

I. What Is A/B Testing?

A/B testing is the practice of comparing two slightly different versions of the same digital content by showing them to separate audience segments to see which one performs better.

A/B testing is also known as split testing. Although many use the two terms interchangeably, the former acts as an umbrella term that encompasses all types of tests that compare a minimum of two variations of something. On the other hand, split testing is a form of A/B testing that experiments with only two variations.

But more about A/B test types later.

This research method has been around for a while. Believe it or not, its principles originated in agriculture a little over 100 years ago when statistician and biologist Ronald Fisher experimented with things like using different quantities of fertilizer on his crops.

He then went on to publish a book called The Design of Experiments that expanded on the statistical methods he experimented with while working the land.

Today, you can use A/B testing in any field with measurable metrics to evaluate, even if it’s most commonly associated with user experience and digital marketing.

II. A/B Testing in Modern Digital Marketing

A/B testing is a great tool that can elevate pretty much any digital marketing strategy.

How come? Because it provides insight into customer behavior and helps marketers make informed decisions.

When you create display ads, for example, you have no idea what reactions a certain design will get. Most times, the users themselves don’t even know why they click on a particular ad or turn a blind eye to another. How can marketers predict such unpredictable behavior?

Sure, relying on assumptions and gut feeling is an option. But is it really the best approach when you can A/B test and collect data that tells you what the audience likes or dislikes?

This method can help you optimize your current campaign and use the data to make decisions about future campaigns. In short, you can take what worked in an A/B test and replicate it. This does not equal guaranteed success, especially with different audience segments, but it gives you a starting point.

What can you test in digital marketing? Well, I’m glad you asked.

Below are the main digital marketing assets you can A/B test, along with examples of elements to use as variables:

- Emails and newsletters (subject line, images, email layout, CTAs, and so on);

- Display ads (ad copy, images, colors, background, CTAs, and other elements);

- Social media ads (text, ad format, ad copy, and images, among others);

- Website pages (nearly any element on the page, including layout, headline, CTAs, images, etc.);

- Mobile apps (new features, frequency of push notifications, CTAs, and countless other elements);

- Video (thumbnail, CTAs, the first few seconds, total length, and the like).

A/B tests are valuable at a marketing level but also at a business level. They’re cost-effective with high returns, giving companies access to qualitative and quantitative data.

Just think about display advertising, which can eat away at your budget when it’s not managed effectively. Instead of wasting hundreds or thousands of dollars on impressions daily, with few clicks and close to no conversions, you can do a few A/B tests, optimize according to the results, and boost ROI.

III. The Benefits of A/B Testing

If you’re not already sold on the idea that A/B testing leads to better performance, let’s dive deeper into the advantages of this research method.

More website traffic

Marketers use many tactics to draw users to their websites, including display advertising, social media advertising, and email marketing. As you’ve seen, all of these can be A/B tested to drive up the number of website visitors. Sometimes the secret to more people clicking through is a new call-to-action; other times, it’s a more persuasive ad copy or something entirely different. You never know until you test.

Better website performance

Once you bring users to your website, you want them to stay there for as long as possible. Not in a “this layout is confusing or difficult to navigate” way but in an “I really like this website, and I want to explore more” way. A/B testing can also help identify areas of your website that could use improvements, lower bounce rates, and boost its overall performance.

Increased conversion rates

Most times, A/B tests are performed with a conversion rate optimization (CRO) goal in mind. Testing different variations of your digital marketing assets can reveal what is most successful in turning users into leads and enhancing conversion metrics. That means getting users to perform certain actions, such as form submissions, clicks, video views, or purchases/sales.

From a broader perspective, businesses derive the following main benefits from using this research method:

Data-driven decision-making

A/B tests provide valuable data that companies can use to make strategic decisions and increase their chances of growth. This approach also reduces risk by allowing businesses to prepare in advance for potential issues that might affect them down the line.

Revenue growth

We’ve already talked about how split tests can boost conversion rates. In turn, higher conversion rates can lead to greater revenue for the business.

Improved customer satisfaction

Testing sometimes reveals user pain points that you can address to ensure customers are more satisfied with your website, product, or marketing assets.

We could rave all day about the importance of knowing how to do A/B testing, but I think you get the idea. Let’s learn more about the process itself.

IV. How A/B Testing Works

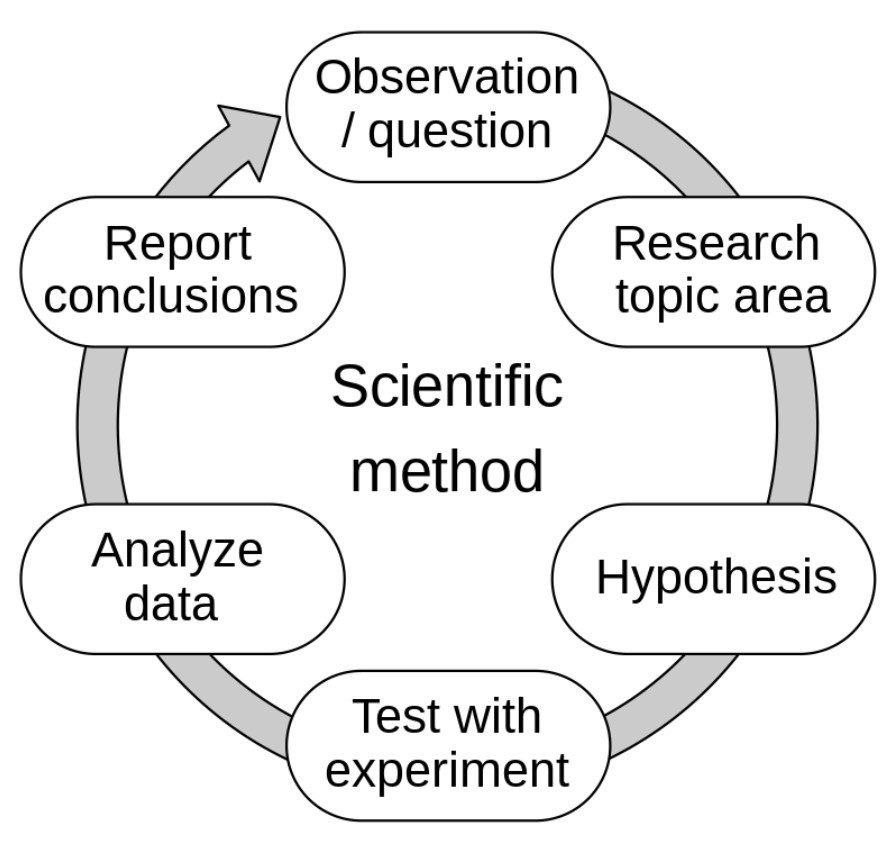

A/B testing is much like the scientific method, which most of us learned about in school.

Yes, the scientific method serves the greater goal of helping us understand the world better, while A/B testing merely aims to improve conversion rates and user experience. But we can’t ignore the similarities.

Both research methods involve testing a hypothesis through an experiment and then using statistical analysis to draw conclusions from the data.

In a nutshell, the A/B testing process goes like this:

Form a hypothesis and set a goal → Create variations to test → Randomly split traffic → Collect data → Analyze the results → Implement the winning version → Repeat the process

The A/B test needs to run for a specific time frame and have a certain sample size to collect enough data and produce statistically significant results. But we’ll see how you determine these two things a bit later.

V. The Different Types of A/B Testing

There are three main types of A/B tests you can perform. Let’s take a closer look at each of them:

1. Split testing

This is the most widely used form of A/B testing, in which two variations of something (email subject, ad creative, webpage, you name it) are shown to users to compare performance.

It’s important to mention that only one variable differentiates the two variations.

Take the ads below. They are precisely the same, except for the image. That is the single element being tested.

Due to having only one variable, split tests deliver reliable results within short time frames with relatively small sample sizes.

2. Multivariate testing

This is a type of experiment where you test multiple variables simultaneously.

It’s more complex than a split test because you need to make a higher number of assets, each with multiple elements modified at once. The goal is to find out which combination of changed variables brings the best results.

Check out the example below.

You might notice there’s an ad creative for every possible combination of the two variables (call-to-action button color and text), which makes these tests full factorial experiments.

Multivariate testing does come in handy when you suspect changing multiple elements might increase performance. However, you need to divide your sample between all of the creatives, meaning you would need a larger audience or a longer test runtime to gather enough traffic and reach statistically significant results.

This is why many marketers prefer to run consecutive A/B tests and test variables one by one rather than run a multivariate test.

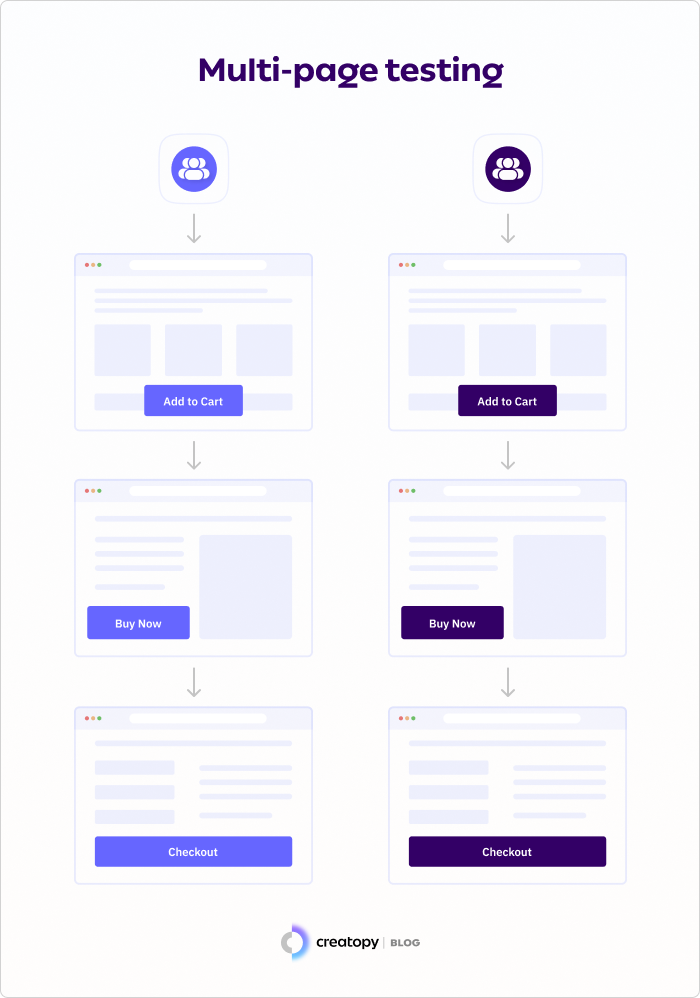

3. Multi-page testing

As the name suggests, this testing technique is mainly used for web pages. You can think of it as a sequence of A/B tests run simultaneously on different pages, with changes to the same elements.

Let’s say you have an online resume-building software, and the registration flow doesn’t bring in as many trials as it should.

You suspect the color of the call-to-action buttons might be at fault, so you decide to test the current color, blue, against a purple shade that will probably create a better contrast with the area around the buttons.

With this in mind, you create two variations of the pricing page, the subsequent registration page, and the final page that lets users know the sign-up process is complete and prompts them to start using the product.

Users see call-to-action buttons that are blue or purple on all three pages, depending on the audience segment they are in.

The example above is also a funnel test as it involves multiple pages of the sales funnel, but this doesn’t always have to be the case.

You can do multi-page testing by, let’s say, comparing the performance of product pages with customer testimonials to that of product pages without. It’s up to you.

VI. Key Metrics Used in A/B Testing

When performing A/B tests, you usually have one primary metric by which you measure the success or failure of your variations. This metric depends on the nature of your test (what you’re testing) and your goal (what you want to achieve through the test).

Besides the primary metric, you can also look at secondary metrics for additional insights.

Suppose you are running A/B tests for ad creatives. Here’s what you could focus on:

- Clicks → the number of users who have clicked on your ad;

- Cost per click → how much a click on your ad has cost you;

- Clickthrough rate → the percentage of ad impressions that resulted in a click;

- Conversion rate → the percentage of users who clicked on your ad and then converted.
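To make the ad metrics above concrete, here is a minimal sketch of how they could be computed from raw counts. The `AdStats` class, its field names, and the sample numbers are made up for illustration; your ad platform will report these figures under its own names.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Hypothetical raw counts for one ad variation."""
    impressions: int   # times the ad was shown
    clicks: int        # clicks on the ad
    conversions: int   # users who clicked and then converted
    spend: float       # total cost of the ad, in any currency


def clickthrough_rate(s: AdStats) -> float:
    """Clicks as a share of impressions."""
    return s.clicks / s.impressions if s.impressions else 0.0


def cost_per_click(s: AdStats) -> float:
    """Average amount paid for one click."""
    return s.spend / s.clicks if s.clicks else 0.0


def conversion_rate(s: AdStats) -> float:
    """Conversions as a share of clicks."""
    return s.conversions / s.clicks if s.clicks else 0.0


# Illustrative numbers only, not real campaign data.
variant_a = AdStats(impressions=10_000, clicks=200, conversions=10, spend=150.0)
print(f"CTR: {clickthrough_rate(variant_a):.2%}")
print(f"CPC: {cost_per_click(variant_a):.2f}")
print(f"Conversion rate: {conversion_rate(variant_a):.2%}")
```

Working with rates rather than raw counts matters because the two variations rarely receive exactly the same number of impressions.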

If you are testing a website page or an ad campaign landing page, you might want to keep an eye on one or more of these performance indicators:

- Unique visitors → the number of users who have visited your web page during the test;

- Return visitors → the number of users landing on your webpage who have visited your website before;

- Time on page → how much time users spend on the webpage;

- Conversion rate → the percentage of users who clicked on a link from the webpage and converted;

- Bounce rate → how often users leave the webpage without performing any action;

- Exit rate → how often users exited the webpage after having previously visited other pages of the website in the same session.

For email marketing, you want to track the following A/B testing metrics:

- Open rate → the percentage of recipients who open your email;

- Clickthrough rate → the percentage of recipients who click on a link within your email;

- Conversion rate → the percentage of recipients who clicked on a link within your email and converted.

I think that’s enough theory for now. Let’s see how you can put things into practice.

VII. How to Conduct an A/B Test

A/B testing includes more than the actual testing and data-collecting part. It actually requires careful planning and execution. Before the test run, you need to make preparations; after, you need to analyze data and draw conclusions.

The steps below cover all the stages of the process, providing a complete roadmap for how to do a split audience experiment with one or more variables.

1. Form a hypothesis and identify variable(s) to test

First, analyze the performance data you currently have on your website, emails, ads, or whatever it is you want to test.

By definition, the hypothesis is simply a supposition that must be tested to be proven true or false, and it stems from making observations.

From a different angle, you need the hypothesis to kickstart your A/B test.

Let’s say you want to do an A/B test for a display ad campaign, and your hypothesis sounds like this:

Observation: Not many viewers are clicking my campaign’s ads.

Hypothesis: If the background of my creatives were black instead of white, the number of clicks would go up.

The hypothesis will reveal the variable you should test. Here it’s the background of the ad creatives. But, depending on what you’re working with, it might be something else.

Earlier, I mentioned it’s possible to test multiple variables at once, which is commonly known as a multivariate test. In this scenario, you’d have to identify more variables to experiment with.

2. Create variations

Considering our example, you need to create a new version of your ad design (with a black background) and then test it against the initial one (with a white background).

This is exactly what our PPC team did with the ads below promoting one of our free webinars.

(If you’re curious, the first ad creative generated 152.17% more landing page views for us than the second one.)

Version A of the ad, the initial one, is called the ‘control’, while the B version is known as the ‘variation’, ‘variant’, or ‘challenger’.

If you’re testing email subject lines, you would have two versions of the text. For landing pages, two versions of the layout/content on the page. And the list goes on.

For multivariate testing, you can use this formula to calculate the number of test variations needed:

No. of variations on variable A × No. of variations on variable B × … × No. of variations on variable n = Total no. of variations

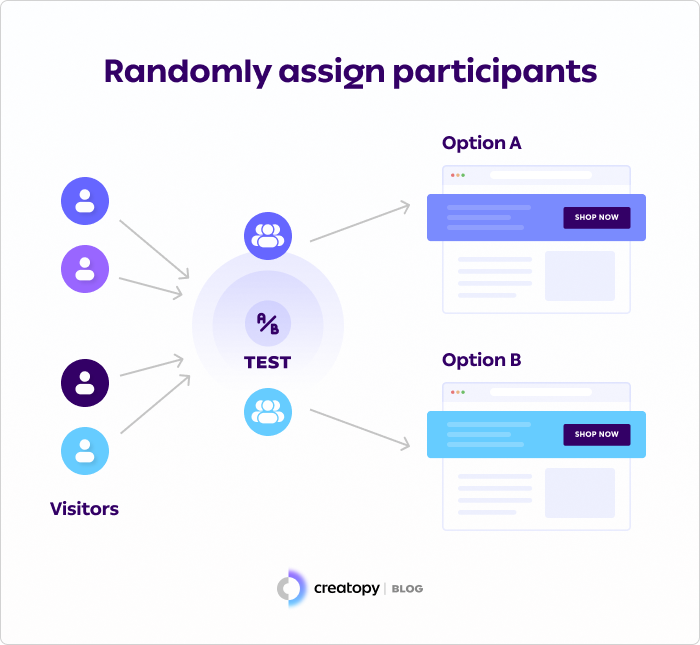
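The multiplication formula above can be sketched in a few lines. The variable names and options below are hypothetical, loosely based on the button color and button text example from earlier; swap in your own.

```python
from itertools import product
from math import prod

# Hypothetical variables and their options for a multivariate test.
variables = {
    "button_color": ["blue", "purple"],
    "button_text": ["Sign up", "Start free trial"],
}

# Total variations = product of the number of options per variable.
total = prod(len(options) for options in variables.values())

# Enumerate every combination (a full factorial design).
combinations = [
    dict(zip(variables, combo)) for combo in product(*variables.values())
]

print(total)  # 4
for combo in combinations:
    print(combo)
```

Adding a third variable with three options would bring the total to 12 creatives, which shows how quickly the traffic you need grows.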

3. Randomly assign participants

The test participants should be assigned randomly to one control group and one (or more) test group(s) to ensure impartial outcomes.

On the matter of how to split traffic for A/B testing, we recommend you divide the audience 50/50 for optimal results. Still, it’s also possible to split it unevenly, for instance, 80/20 or 70/30.
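If you ever need to handle the split yourself, here is one way it could look. This is only a sketch: it swaps true randomness for hash-based bucketing, a common technique that keeps each user in the same group on every visit. The function name, the user IDs, and the test name are all assumptions for illustration, not part of any particular tool.

```python
import hashlib


def assign_variant(user_id: str, test_name: str, weights: dict[str, float]) -> str:
    """Deterministically map a user to a variant in proportion to the weights."""
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode()).hexdigest()
    # Turn the first 8 hex digits into a number in [0, 1).
    point = int(digest[:8], 16) / 0x100000000
    total = sum(weights.values())
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return variant
    # Guard against floating-point rounding at the top edge.
    return list(weights)[-1]


# A 50/50 split and an uneven 80/20 split (hypothetical IDs and test names).
print(assign_variant("user-42", "cta-color", {"control": 50, "variant": 50}))
print(assign_variant("user-42", "cta-color", {"control": 80, "variant": 20}))
```

Including the test name in the hash means the same user can land in different groups across different tests, so one experiment doesn’t bias the next.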

Whichever method you choose, you need to make sure both subsets meet the minimum number of people needed to achieve statistical significance.

How do you determine that number, you might ask?

If you’re not enthusiastic about doing the math to find out the required number of participants (I don’t blame you because neither am I), don’t sweat it. Just use an online sample size calculator. Most A/B testing software has this feature built-in, so you don’t even have to go looking for an auxiliary tool.

Alternatively, you can ask a data scientist or analyst to calculate it for you.
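For the curious, here is a rough sketch of the kind of math a sample size calculator might do behind the scenes. It uses a standard textbook formula for comparing two conversion rates; the 5% significance level and 80% power are common defaults that I’m assuming here, and your calculator of choice may use different ones or a different formula altogether.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    expected_rate: float,
    alpha: float = 0.05,   # assumed significance level (95% confidence)
    power: float = 0.80,   # assumed chance of detecting a real effect
) -> int:
    """Approximate users needed in EACH group for a two-sided test."""
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ to size a test.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (
        baseline_rate * (1 - baseline_rate)
        + expected_rate * (1 - expected_rate)
    )
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: lift the conversion rate from 2% to 2.5%.
print(sample_size_per_variant(0.02, 0.025))
```

The takeaway is that the smaller the improvement you want to detect, the more participants you need in each group.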

If you’re dealing with a limited audience like an email contacts list, you must first ensure it’s big enough to run an A/B test. In email marketing, for example, you would need audience segments of at least 1,000 recipients.

For indefinite audiences like the ones in display advertising, the sample size is influenced by the run time of the test. The longer it lasts, the more traffic it gathers.
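To get a feel for how run time and traffic relate, here is a back-of-the-envelope sketch. The function name and the traffic figures are hypothetical, and it assumes traffic is steady from day to day, which is rarely exactly true.

```python
from math import ceil


def estimated_test_days(
    required_per_variant: int, variants: int, daily_visitors: int
) -> int:
    """Days needed for every variant to reach its required sample size,
    assuming traffic is split evenly and stays constant."""
    total_needed = required_per_variant * variants
    return ceil(total_needed / daily_visitors)


# Hypothetical: 14,000 users per variant, 2 variants, 2,000 visitors a day.
print(estimated_test_days(14_000, 2, 2_000))  # 14
```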

4. Track and measure results

You shouldn’t launch your A/B test, then wait for it to complete its run to check the results at the very end. Quite the opposite—you must track and measure results in real-time during the test run as well. Here is where the predefined key metrics we talked about toward the beginning of the article come into play.

This prevents costly fixes and unwanted do-overs of the experiment. Let’s assume that maybe you didn’t properly set up the test. Unless you monitor data constantly, you might realize that only at the end, when a do-over is required.

Besides, in some cases, you need to check whether you’ve met the sample size number you require for significant results to know whether to extend the test duration or not.

Many A/B testing platforms have built-in analytics to help you track all relevant metrics and KPIs.

For display ad experiments, we recommend you integrate each A/B test with Google Analytics, regardless of the primary tool you use. By doing this, you’ll have a more comprehensive view of the campaign’s performance. Besides, harnessing test data from multiple sources makes for more reliable results.

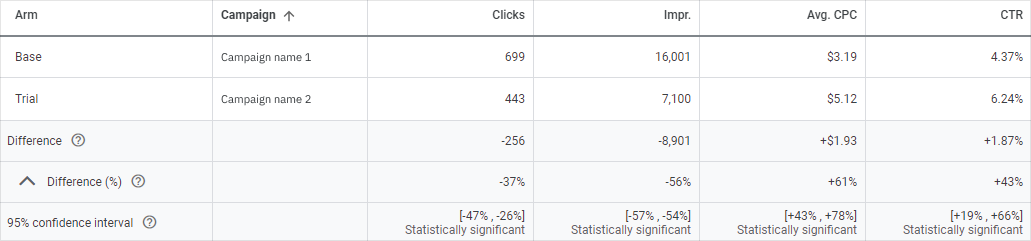

5. Analyze data and interpret the results

Once the test has concluded, you must analyze the A/B test report and determine if there is a difference in performance between the test variations. Moreover, it needs to be statistically significant, which is just the sciency way of saying it can be attributed to your variable, and it’s not just a matter of chance.

Confidence level plays an important role in determining which variation was more successful. This is a percentage that indicates how confident you are that the conclusion of the test is correct.

For example, your variation can have an increase in conversion compared to the original. However, this is not enough to declare it the winner of the test. You must also check if it has a high probability of beating the control version, a.k.a. the confidence level.

In A/B testing, confidence levels are usually set above 85%, with 95% being a common choice. Still, how high or low you set this percentage depends on your risk tolerance.

You can calculate all this the old-school way if you want to, but you don’t have to know any complicated formula by heart. We recommend you use one of many statistical significance calculators available online to make it easier and eliminate the risk of human error.
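If you’d like to peek under the hood, here is a sketch of one common way to check significance: a two-proportion z-test. I’m not claiming this is what any specific calculator or platform runs, since some use other methods. The conversion counts in the example are made up.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> tuple[float, float]:
    """Return (z score, two-sided p-value) for B's rate versus A's rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 200/10,000 vs. challenger 260/10,000.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
# With a 95% confidence threshold, p < 0.05 counts as significant.
print("significant" if p < 0.05 else "not significant")
```

A small p-value means a difference this large would be unlikely if the two versions really performed the same.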

In the end, your test can be successful, unsuccessful, or inconclusive.

If the data from the A/B test proves your hypothesis is wrong, you shouldn’t be discouraged. It simply means you can rule out this one variable. This leads me to the next point.

6. Test regularly

You should continue testing regardless of the outcome of your experiment. I mean, look at it this way:

- If your result is successful, you can test other variables and improve performance even further;

- If your result is negative, you need to run another experiment and find variables that will actually lift your metrics;

- If your result is inconclusive, well, this one is self-explanatory—a redo of the test is called for.

On the same topic, you shouldn’t do experiments only when things go wrong.

There is always room for improvement. Regularly performing A/B tests can ensure continued success or help you reach an even higher level of performance. The worst thing you can do is give in to the “if it ain’t broke, don’t fix it” mindset.

You can even create an A/B testing calendar to make sure you turn regular experiments into a priority.

VIII. Best Practices for A/B Testing

I’m sure by now you understand that this type of experiment requires meticulous preparation. This also means there is a risk of making mistakes. Ideally, you want to avoid bias and ensure accurate results.

Luckily, A/B tests are so widespread that a series of best practices have been refined and verified over time. In other words, many have walked so you could run (A/B tests).

Let’s go through the most important things you should consider when designing your experiment:

Establishing clear goals

Goals not only help you set up your A/B test, but they are also vital to measuring success or failure. Don’t wait until you’ve begun testing to think about what you want to achieve. You want to have a clear direction laid out before you from the start; otherwise, your test might be rendered useless.

Running A/A tests first

An A/A test is just like an A/B test, with one key difference: it tests two identical pages against each other. You might wonder what the purpose of such an experiment is. Basically, it’s a trial run to ensure that the test is set up correctly and that the testing platform works properly before the actual A/B test.

Testing only one variable at a time

If you’re not running a multivariate test, make sure you modify a single, isolated variable in one go. Why is this so important? Because having more variables will make it hard to understand what went right or wrong.

Conducting A/B tests often

I might be repeating myself here, but many businesses make the mistake of using A/B tests only when things go wrong with their website/emails/ad campaigns. If you’re not testing regularly, let’s just say you’re missing out on many opportunities to improve your marketing efforts and user experience.

Daring to take risks

Sometimes marketers can be scared that A/B testing might have a negative impact on performance, especially when it comes to digital assets that are already doing pretty well. Suppose your fears come true, and your metrics drop for your challenger variation. You can always hit the stop button and test a different variable instead, one that might actually render positive test results.

Now that we’ve got the dos covered, it’s time to go over the don’ts. Make sure you steer clear of the following common pitfalls when conducting A/B tests:

Using insufficient sample sizes

If you don’t have a large enough A/B testing sample size, your test may produce invalid results. Make sure you always perform sample size calculations beforehand. If you’re on the lower side and still decide to perform the test, there’s a risk you’ll waste part of your budget on a test that might be inconclusive. Another option is to wait until you have a larger pool of users to start the experiments.

Cutting the experiment too short

The general consensus is that you should run an A/B test for a minimum of one to two weeks, but this depends on what you’re testing. With email marketing, for example, you might get conclusive results in a matter of hours or days. Display ad A/B tests, in contrast, require more time to get a representative sample and gather enough data.

Looking at too many metrics

Don’t get me wrong, I’m not saying you should not check secondary metrics at all. In fact, you might remember I mentioned the opposite earlier in the article. Still, when calculating statistical significance, you should only take your primary metric into account. Measuring the success of your test based on other metrics will increase the risk of false positives.
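Here is a quick sketch of why judging a test on several metrics inflates false positives. It assumes each metric is checked at a 5% significance level and that the metrics are independent of each other, which is a simplification, since real metrics like clicks and conversions tend to move together.

```python
def family_wise_error_rate(alpha: float, metrics: int) -> float:
    """Chance of at least one false positive across independent metrics."""
    return 1 - (1 - alpha) ** metrics


for k in (1, 3, 5, 10):
    print(k, round(family_wise_error_rate(0.05, k), 3))
# 1 0.05
# 3 0.143
# 5 0.226
# 10 0.401
```

In other words, under these assumptions, checking ten metrics gives you roughly a 40% chance that at least one of them looks like a win purely by chance.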

Having unrealistic expectations

I’m going to be honest with you: not every A/B test you run will boost your conversions or revenue. Actually, most of them won’t, and that’s completely fine. Google and Bing have previously reported that only 10% to 20% of their experiments have positive outcomes. But while many A/B tests won’t significantly impact your metrics, they will teach you something new about your audience, which is a win in itself.

IX. How to Do A/B Testing in Creatopy

If you use display ads to promote your business or the businesses of your clients, then you know A/B testing offers valuable data that you can use for campaign optimization.

Well, we have good news for you. In addition to ad creation, the Creatopy platform includes the A/B testing functionality alongside many other tools for creative optimization.

For those of you who prefer video content, this short tutorial will teach you everything you need to know to conduct A/B testing in Creatopy successfully.

However, I’ll also quickly explain the process here.

You can experiment with testing various elements in your creatives, including text, background, logo, and colors for the call-to-action button. What we recommend, once again, is changing one component at a time so you know which one influences the effectiveness of your ads.

Creatopy’s A/B testing solution allows you to simply choose which creatives to include in your A/B test and deliver your campaign to your desired audience in just a few clicks. The only requirement for starting an A/B test is to select at least two and no more than five creatives, which must have the same size.

You can easily add and remove creatives from the test, and you also have the option to add a fitting name and description for your test to keep all your projects organized.

The beauty of using Creatopy is that you don’t need to go through the traditional process of downloading your creatives and uploading them to your desired ad networks to run your A/B test. With our ad-serving tools, you can simply choose your preferred ad networks, and we’ll generate an ad tag code for your A/B test, which you can then further import to your desired platforms.

Working with ad tags has the benefit of allowing you to update your creatives at any point in real time, and not having to worry about any size limitations before uploading them to the ad network.

Our platform will collect the data as soon as you launch your ads in your chosen ad networks. You can check the status of your test at any point and track the results that are being produced, such as CTR and impressions, in detailed analytics dashboards.

An A/B test can run anywhere from 7 to 30 days, and if one of the creatives reaches a win probability of 85%, the test will automatically end, and the winning creative will continue to be served. You can also end the test manually if you think that it rendered enough results for you to make a decision.
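If you’re wondering what a “win probability” might look like in practice, here is an illustrative sketch. To be clear, this is not Creatopy’s actual calculation, which isn’t described in this article. It’s one common Bayesian approach for two creatives, and the click and impression counts are made up.

```python
import random


def win_probability(
    clicks_a: int, views_a: int, clicks_b: int, views_b: int,
    draws: int = 20_000, seed: int = 0,
) -> float:
    """Estimate the probability that B's true click rate beats A's.

    Assumes a uniform Beta(1, 1) prior on each creative's click rate
    and estimates the result by simulation.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + clicks_a, 1 + views_a - clicks_a)
        rate_b = rng.betavariate(1 + clicks_b, 1 + views_b - clicks_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical counts: creative A 200/10,000 vs. creative B 260/10,000.
prob = win_probability(200, 10_000, 260, 10_000)
print(f"Probability that B beats A: {prob:.1%}")
print("stop the test" if prob >= 0.85 else "keep collecting data")
```

With identical counts for both creatives, the estimate hovers around 50%, which is exactly what you would expect when there is no real difference.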

How marketers and designers can collaborate on A/B testing

When it comes to preparing creatives for A/B testing campaigns, designers have to spend a significant amount of time building the necessary variations. Usually, brands and agencies alike will have multiple A/B tests running at the same time, requiring the creative team to double their efforts.

With Creatopy, designers can easily prepare custom-branded templates for the team, which can be used as a starting point in various A/B testing campaigns. As such, marketers can start an A/B test using a pre-made template and change the creative components as they see fit for each particular A/B test without having to work with a designer. This means a faster A/B testing process, more autonomy for marketers, and more time for designers to bring their creativity into play.

Final Thoughts

Knowing how to do A/B testing helps digital marketers (as well as other professionals) understand customers and make data-driven decisions that lead to better business outcomes.

It’s easy for any company to get started with A/B testing, especially since there are plenty of tools out there that can automate at least part of the process, Creatopy included.

It’s true most of the A/B testing tools on the market rely heavily on cookies, which, as we know, are soon to be phased out. This raises concerns about the future of testing since users who visit a website multiple times would be perceived as new users, resulting in unreliable findings.

The solution could lie in technologies like server-side testing that allow user data to be stored as first-party cookies. But that’s a discussion for another day. Until then, having an A/B testing marketing strategy remains vital.